Conversations API

Conversation intelligence is a set of machine learning techniques for mining actionable, useful information from conversations. Examples include calls to action, questions asked, participant sentiment, and other data points that might be missed during normal dialogue. Use Symbl.ai conversation intelligence to improve, analyze, and act on conversations at scale.

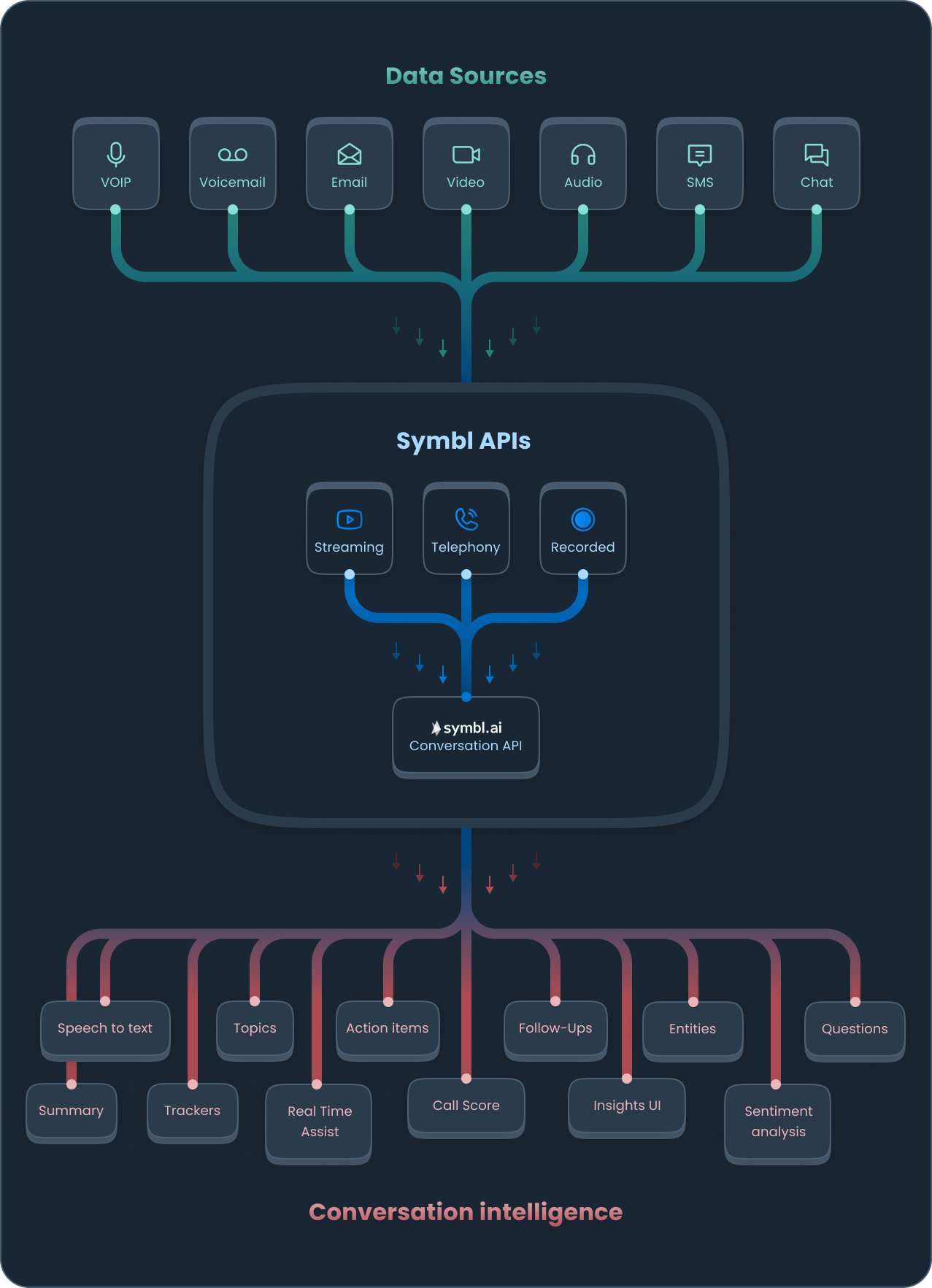

Using Symbl.ai technology, you can analyze conversations in different formats, including text, audio, and video. You can process conversations in real-time or asynchronously.

You can send your conversation data to Symbl.ai in real-time using the Streaming API or the Telephony API. If you have a recorded file of the conversation, you can process it using the Async API.

After processing a conversation, you can use specialized conversation intelligence tools such as Speech to Text, Topics, Trackers, and Action Items. You can also generate a customizable UI.

Figure: Symbl.ai data flow. Data moves from the original source through Async, Streaming, or Telephony processing, then through the Conversations API, resulting in conversation intelligence.
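Once a conversation is processed, each type of insight is typically read back with an authenticated GET request against the conversation ID. The sketch below only builds such a request with the standard library. The base URL, the insight path names, and the Bearer token header are assumptions modeled on the v1 Conversations API, so confirm them in the API reference before relying on them.

```python
from urllib.parse import quote
from urllib.request import Request

# Assumption: base URL and insight paths modeled on the Symbl.ai v1
# Conversations API; confirm them against the API reference before use.
BASE_URL = "https://api.symbl.ai/v1/conversations"
INSIGHT_PATHS = {
    "messages", "topics", "action-items", "follow-ups",
    "questions", "analytics", "entities",
}


def insight_request(conversation_id: str, insight: str, access_token: str) -> Request:
    """Build (but do not send) a GET request for one insight of a processed conversation."""
    if insight not in INSIGHT_PATHS:
        raise ValueError(f"unknown insight path: {insight}")
    # Percent-encode the ID so it always stays a single path segment.
    url = f"{BASE_URL}/{quote(conversation_id, safe='')}/{insight}"
    return Request(url, headers={"Authorization": f"Bearer {access_token}"}, method="GET")


req = insight_request("1234567890", "topics", "YOUR_ACCESS_TOKEN")
print(req.full_url)  # https://api.symbl.ai/v1/conversations/1234567890/topics
```

To actually send the request, pass it to `urllib.request.urlopen` and decode the response body with `json.load`.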

Speech-to-text

Symbl.ai provides access to searchable transcripts with timestamps and speaker information. The transcript is a refined output of the speech-to-text conversion.

The transcript is a convenient way to navigate through an entire conversation. You can filter it by speaker or by topic. Each insight or action item can also lead to related parts of the transcript.
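As a minimal sketch of a speaker-specific filter, the function below keeps only the lines spoken by one person. The message shape (a `text` field plus a `from` object carrying the speaker's `name`) is an assumption about the transcript data, and the sample messages are hypothetical.

```python
# Hypothetical sample shaped like a messages response: each message is
# assumed to carry "text" and a "from" object with the speaker's "name".
messages = [
    {"text": "Let's review the board updates.", "from": {"name": "John"}},
    {"text": "I sent the draft yesterday.", "from": {"name": "Arya"}},
    {"text": "Great, I'll read it tonight.", "from": {"name": "John"}},
]


def lines_by_speaker(messages: list[dict], speaker: str) -> list[str]:
    """Return the transcript lines spoken by one speaker, in original order."""
    return [m["text"] for m in messages if m.get("from", {}).get("name") == speaker]


print(lines_by_speaker(messages, "John"))
```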

You can generate transcripts in real-time for voice and video conversations or asynchronously using a recorded file. You can view and analyze transcripts using the post-conversation Summary UI. The post-conversation summary page lets you copy and share transcripts from the conversation.

To learn more about creating transcripts, see Convert Speech to Text.

Topics

Topics provide a quick overview of the key points of a conversation. Symbl.ai detects Topics contextually based on what is relevant in the conversation. It does not detect Topics based on the frequency of their occurrences in a conversation. Each Topic is an indication of one or more important points discussed in the conversation.

Symbl.ai assigns each Topic a weighted score that indicates its importance in the context of the entire conversation. The score is not based on the number of mentions, so a point that is mentioned less frequently might have higher importance in the conversation.
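To illustrate why ranking by weighted score can differ from ranking by frequency, the sketch below sorts the same hypothetical Topics both ways. The `score` field stands in for the weighted score described above; the `mentions` count is an illustrative field invented for comparison and is not assumed to be part of any response.

```python
# Hypothetical topics; "score" mirrors the weighted score described above,
# while "mentions" is an illustrative count, not an assumed response field.
topics = [
    {"text": "pricing", "score": 0.41, "mentions": 9},
    {"text": "contract renewal", "score": 0.88, "mentions": 2},
    {"text": "onboarding", "score": 0.63, "mentions": 4},
]

by_score = [t["text"] for t in sorted(topics, key=lambda t: t["score"], reverse=True)]
by_mentions = [t["text"] for t in sorted(topics, key=lambda t: t["mentions"], reverse=True)]

print(by_score)     # ['contract renewal', 'onboarding', 'pricing']
print(by_mentions)  # ['pricing', 'onboarding', 'contract renewal']
```

The least-mentioned topic here ranks first by score, which is the behavior the paragraph above describes.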

You can get Topics in real-time or asynchronously. To learn more about Topics, see Topics.

Topic hierarchy

A conversation can include multiple related Topics. You can use the Topic Hierarchy API to organize Topics for better insights and consumption. The Symbl.ai Topic Hierarchy algorithm finds a pattern in the conversation and creates global Parent Topics. Each Parent Topic can have multiple nested Child Topics.
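A minimal sketch of turning a flat Topic list into Parent Topics with nested Child Topics follows. It assumes each Child Topic carries a `parentRefs` list naming its parent by `text`, and that Parent Topics have an empty or missing `parentRefs`; both the field name and the sample data are assumptions, so check the Topics Hierarchy documentation for the actual response shape.

```python
# Hypothetical flat topic list; "parentRefs" is an assumed field linking a
# child topic to its parent by the parent's text.
topics = [
    {"text": "budget planning", "parentRefs": []},
    {"text": "hiring", "parentRefs": [{"type": "topic", "text": "budget planning"}]},
    {"text": "travel costs", "parentRefs": [{"type": "topic", "text": "budget planning"}]},
    {"text": "board updates", "parentRefs": []},
]


def build_hierarchy(topics: list[dict]) -> dict[str, list[str]]:
    """Map each parent topic to the child topics that reference it."""
    # Topics without parent references are treated as parents (roots).
    tree = {t["text"]: [] for t in topics if not t.get("parentRefs")}
    for t in topics:
        for ref in t.get("parentRefs", []):
            tree.setdefault(ref["text"], []).append(t["text"])
    return tree


print(build_hierarchy(topics))
```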

For more information, see Topics Hierarchy.

Abstract topics (Labs)

Abstract topics help you determine recurrent themes in a conversation. For more information, see Abstract Topics (Labs).

Action items

An action item is a specific outcome recognized in a conversation that requires one or more participants to take a specific action and shows a clear commitment.

For example:

“This was a great conversation, I will summarize this meeting and send a follow-up to all the stakeholders”

To learn more, see Action Items.

Comprehensive action items

The Comprehensive Action Items API is similar to the Action Items API. The Comprehensive Action Items API returns a rephrased form of the original action item message that includes the corresponding context.

Compared to action items, comprehensive action items provide more details, including references to speaker names, the context in which the action item occurred, and a description of the action item.

Follow-ups

Symbl.ai can recognize if an action item requires a follow-up. A follow-up can be a general action item, or assigned to a specific person. A follow-up assigned to a person usually includes setting up a meeting or appointment.

Follow-up details include the assignee, date-time ranges, and entities. Follow-ups are regenerated with speaker context that refers back to the transcript or message. The Summary UI comes with an out-of-the-box calendar integration for this type of follow-up insight.

An example of a transcript sentence that triggers a follow-up:

- “John, let’s set a time to discuss the board updates tomorrow evening.”

To learn more, see Follow-Ups.

Questions

The Questions API recognizes any explicit question or request for information that comes up during a conversation. The question can be answered or unanswered.

Examples:

- “What features are most relevant for our use case?”

- “How are we planning to design the systems?”

- “When is the next meeting? We are scheduled to meet tomorrow at noon.”

For more information, see Questions.

Redaction

Identify and redact sensitive information from transcripts using the Conversations API.

Use cases include maintaining compliance and protecting consumer data, automating workflows, and employee training.

Types of redacted output include:

- Personally Identifiable Information (PII).

- Payment Card Industry (PCI) data.

- Protected Health Information (PHI).

- General information such as file names, times, and URLs.
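To show what redacted output can look like, here is a deliberately simple regex stand-in that masks email addresses and card-like numbers with bracketed labels. This is not how the Conversations API detects sensitive data; the patterns and the `[EMAIL]` / `[CARD_NUMBER]` placeholder labels are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical patterns and placeholder labels, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD_NUMBER": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a bracketed label such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Email jane.doe@example.com or charge 4111 1111 1111 1111."))
# Email [EMAIL] or charge [CARD_NUMBER].
```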

For more information, see Redaction.

Trackers

Trackers are a powerful conversation intelligence feature you can use to automatically recognize phrases and their meaning in conversations.

An individual tracker is a group of phrases identifying a characteristic or an event you want to track in conversations. You can track critical moments in a conversation in real-time or asynchronously.

Trackers don't simply look for keywords or phrases. Trackers identify the meaning of your samples to create a full-fledged tracking context that includes similar and alternative samples.

For example, “I don’t have any money” is contextually similar to “I ran out of budget” but not identical. Both phrases represent similar inherent meaning. After processing the conversation, the Trackers feature detects that the context and meaning are similar and identifies both phrases.
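The example above can be checked against a plain keyword approach. The sketch below measures word overlap (Jaccard similarity over lowercase tokens) between the two phrases and tests whether the keyword "money" appears in the second one. The near-zero overlap shows why a keyword match would miss the second phrase even though both phrases mean roughly the same thing.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens, keeping apostrophes inside words like don't."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct tokens the two phrases have in common."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb)


sample = "I don't have any money"
heard = "I ran out of budget"

print("money" in tokens(heard))          # False: a keyword match misses it
print(round(jaccard(sample, heard), 3))  # 0.111: almost no words in common
```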

The Trackers feature includes a Managed Trackers Library that consists of out-of-the-box trackers in four categories: Sales, Contact Center, Recruitment, and General. Managed Trackers are curated and managed by Symbl.ai.

Sign in to the Symbl.ai Platform to select trackers from Trackers Management > Managed Trackers Library. You can also see trackers in action by completing the end-to-end process in API Explorer > Trackers.

As you process conversations, the Trackers feature makes recommendations in the form of existing Managed Trackers. Any recommendations you accept appear in the platform at Trackers Management > Your Trackers.

For more information, see Trackers. For step-by-step instructions, see Getting Started with Trackers.

Summary

You can use the Summary API to distill conversations into succinct summaries. The Summary API saves you time compared to manually extracting the highlights of a conversation. You can create a summary in real-time or after the conversation has ended. You can also create a summary for chat or email messages.

For more information, see Summary.

Pre-built summary UI

Symbl.ai provides a pre-built Summary UI to demonstrate user experiences available with conversation intelligence. The Summary UI is available as a URL that can be shared via email with selected participants. The URL can also be used to embed a link as part of the conversation history within the application.

The Summary UI provides:

- Title and details of the conversation, including date and number of participants.

- Names of all the participants.

- Topics covered in the conversation in the order of importance.

- Full, searchable transcript of the conversation. Transcripts can be edited, copied, and shared.

- Insights, action items, or questions from the transcript.

You can also customize the Summary UI for a specific use case or product requirement.

Key features of the Summary UI include an interactive UI, white labeling to customize it for your own use, a customizable URL, tuning to enable or disable individual features, and user engagement analytics to track user interactions.

To learn more, see Pre-Built Experiences: Summary UI.

Sentiment analysis

Sentiment analysis interprets the general thought, feeling, or sense of an object or a situation.

The Symbl.ai Sentiment Analysis feature analyzes speech-to-text sentences and topics (or aspects). It returns the intensity of a sentiment and suggests a sentiment type: negative, neutral, or positive.
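A sketch of how a numeric sentiment intensity might be mapped to a suggested type follows. It assumes a polarity score between -1.0 and 1.0 stored under `sentiment.polarity.score` on each message; that field path and the -0.3 / 0.3 cut-offs are assumptions for illustration, so check the Sentiment Analysis documentation for the real ranges.

```python
# Assumed cut-offs on a polarity score in [-1.0, 1.0]; illustrative only.
NEGATIVE_MAX = -0.3
POSITIVE_MIN = 0.3


def suggest_sentiment(score: float) -> str:
    """Map a polarity score to a negative, neutral, or positive label."""
    if not -1.0 <= score <= 1.0:
        raise ValueError("polarity score must be between -1.0 and 1.0")
    if score <= NEGATIVE_MAX:
        return "negative"
    if score >= POSITIVE_MIN:
        return "positive"
    return "neutral"


# Hypothetical message; the nested field path is an assumption.
message = {"text": "This demo went really well.", "sentiment": {"polarity": {"score": 0.72}}}
print(suggest_sentiment(message["sentiment"]["polarity"]["score"]))  # positive
```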

To learn more, see Sentiment Analysis.

Conversation analytics

Conversation analytics identify the parts of a conversation that help you find a specific place in a transcript or trigger an action based on an insight. Insight categories include question, action item, and follow-up.

For example, consider a conversation in which John and Arya talked on the phone for 60 minutes. John talked for 40 minutes and Arya talked for 10 minutes. No one spoke for the remaining 10 minutes because John kept Arya on hold. Conversation analytics provides the speaker ratio, talk time per speaker, silence, pace, and overlap, and supplies this data for monitoring and insights.
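The arithmetic behind the John and Arya example can be worked through directly. This sketch assumes no overlapping speech, so silence is simply the call length minus total talk time; the percentage shares and the 4:1 ratio follow from the figures in the example.

```python
# Durations in minutes, taken from the John and Arya example.
call_length = 60
talk_time = {"John": 40, "Arya": 10}

# Assumes no overlapping speech, so silence is whatever time is left over.
silence = call_length - sum(talk_time.values())
share = {name: round(100 * minutes / call_length, 1) for name, minutes in talk_time.items()}
john_to_arya = talk_time["John"] / talk_time["Arya"]

print(silence)       # 10
print(share)         # {'John': 66.7, 'Arya': 16.7}
print(john_to_arya)  # 4.0
```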

Key features:

- Speaker ratio, the ratio of one speaker's talk time to another's.

- Talk time per speaker.

- Silence, the time during which none of the speakers said anything.

- Pace, the speed at which a person spoke, in words per minute (wpm).

- Overlap, which shows whether a speaker spoke over another speaker, provided as a percentage of the total conversation and as overlap time in seconds.
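Pace and overlap, as listed above, can be sketched from timestamped speech segments. The `Segment` type and the sample data are hypothetical. Overlap is summed over pairs of segments from different speakers, which is exact for two speakers but would double-count moments when three or more people talk at once.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Segment:
    """Hypothetical speech segment: who spoke, when (seconds), and how many words."""
    speaker: str
    start: float
    end: float
    words: int


def pace_wpm(segments: list[Segment], speaker: str) -> float:
    """Words per minute over the time the speaker was actually talking."""
    spoken = [s for s in segments if s.speaker == speaker]
    seconds = sum(s.end - s.start for s in spoken)
    return 60 * sum(s.words for s in spoken) / seconds if seconds else 0.0


def overlap_seconds(segments: list[Segment]) -> float:
    """Total time in which segments from different speakers overlap."""
    total = 0.0
    for a, b in combinations(segments, 2):
        if a.speaker != b.speaker:
            total += max(0.0, min(a.end, b.end) - max(a.start, b.start))
    return total


segments = [
    Segment("John", 0, 30, 75),
    Segment("Arya", 28, 40, 30),  # Arya starts 2 seconds before John finishes
    Segment("John", 40, 60, 50),
]
duration = max(s.end for s in segments) - min(s.start for s in segments)

print(pace_wpm(segments, "John"))                   # 150.0
print(overlap_seconds(segments))                    # 2.0
print(round(100 * overlap_seconds(segments) / duration, 2))  # 3.33
```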

For more information, see Conversation Analytics.

Entity Detection

The Entity Detection feature provides named-entity recognition support for your conversations. The Entity Detection feature identifies and extracts critical data from your conversations. Use the feature to:

- Simplify the way your organization detects entities across numerous conversations.

- Locate PII, PCI, and PHI data to help you comply with PCI SSC, HIPAA, GDPR, and CCPA requirements.

- Create custom entities to support product features and workflows in your organization.
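Once entities have been detected, a common next step is grouping them by type and flagging the sensitive ones. The sketch below assumes each detected entity has `type` and `value` fields; those names, the sample entities, and the `SENSITIVE_TYPES` set are hypothetical and not an official list of entity types.

```python
from collections import defaultdict

# Hypothetical detected entities; the "type" and "value" field names are assumptions.
entities = [
    {"type": "person", "value": "John"},
    {"type": "date", "value": "tomorrow evening"},
    {"type": "person", "value": "Arya"},
    {"type": "credit_card_number", "value": "4111 1111 1111 1111"},
]

# Illustrative set of types to treat as sensitive (PII/PCI/PHI); not an official list.
SENSITIVE_TYPES = {"credit_card_number", "ssn", "medical_condition"}


def group_by_type(entities: list[dict]) -> dict[str, list[str]]:
    """Collect entity values under their entity type, preserving order."""
    grouped: defaultdict[str, list[str]] = defaultdict(list)
    for entity in entities:
        grouped[entity["type"]].append(entity["value"])
    return dict(grouped)


def sensitive_entities(entities: list[dict]) -> list[dict]:
    """Return only the entities whose type is in SENSITIVE_TYPES."""
    return [e for e in entities if e["type"] in SENSITIVE_TYPES]


print(group_by_type(entities))
print(sensitive_entities(entities))
```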

For details, see Entity Detection.

Bookmarks

Bookmarks provide a convenient way to highlight and summarize critical points in a conversation. Quickly get to key moments of a conversation and share those moments with others.

For details, see Bookmarks.
