Live transcription from a phone call

Get a live transcription in your Node.js application by making a call to a valid phone number. In this guide, we will walk you through how to get a live transcription and real-time AI insights, such as follow-ups, action items, topics, and questions, from a phone call using a PSTN or SIP connection.

This application uses the Symbl JavaScript SDK, which requires the `symbl-node` package.

Making a phone call is also the quickest way to test Symbl's Telephony API. The API can make an outbound call to a phone number over the traditional public switched telephone network (PSTN), or to SIP trunks or SIP endpoints that can be accessed over the internet using a SIP URI.

Authentication

Before continuing, you must generate an authentication token (AUTH_TOKEN) as described in Authentication.

Getting Started

In this example, we use the following variables, which you must replace with your own values for the code examples to work:

| Key | Type | Description |
|---|---|---|
| APP_ID | String | The application ID you get from the home page of the platform. |
| APP_SECRET | String | The application secret you get from the home page of the platform. |
| AUTH_TOKEN | String | The JWT you get from our authentication process. |
| PHONE_NUMBER | String | A phone number that you want the API to connect to. Be sure to include the country code. |
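Rather than pasting credentials into source files, you may prefer to read these values from environment variables. The following is a minimal sketch under that assumption: the environment variable names simply mirror the table keys, and `loadConfig` is a hypothetical helper, not part of the Symbl SDK. `AUTH_TOKEN` is left out because the SDK initialization in this guide only uses the app ID and secret.

```javascript
// Hypothetical helper (not part of symbl-node): reads the settings from the
// table above out of an environment-like object such as process.env.
const REQUIRED_KEYS = ['APP_ID', 'APP_SECRET', 'PHONE_NUMBER'];

function loadConfig(env) {
  // Collect every required key that is missing or empty.
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing required settings: ${missing.join(', ')}`);
  }
  return {
    appId: env.APP_ID,
    appSecret: env.APP_SECRET,
    phoneNumber: env.PHONE_NUMBER,
  };
}

// Usage (assumed): const config = loadConfig(process.env);
```

Failing fast with a list of every missing key tends to be friendlier than discovering them one at a time from SDK errors.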

To get started, you’ll need your account credentials and Node.js installed (> v8.x) on your machine.

We’ll use the Symbl module for Node.js in this guide. Make sure you have a Node project set up. If you don’t have one, you can set one up using npm init.

From the root directory of your project, run the following command to add `symbl-node` to your project dependencies:

```shell
$ npm i --save symbl-node
```

Your credentials include your appId and appSecret. You can find them on the home page of the platform.

Create a new file named index.js in your project and add the following lines to initialize the Symbl SDK:

```javascript
const {sdk, SpeakerEvent} = require("symbl-node");

// Replace APP_ID and APP_SECRET with your own credentials.
const appId = APP_ID;
const appSecret = APP_SECRET;

sdk.init({
  appId: appId,
  appSecret: appSecret,
}).then(async () => {
  console.log('SDK initialized.');
  try {
    // Your code goes here.
  } catch (e) {
    console.error(e);
  }
}).catch(err => console.error('Error in SDK initialization.', err));
```

Make a call

The quickest way to test the Telephony API is to make a call to a valid phone number. Note that the Telephony API only works with phone numbers in the U.S. and Canada.
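Because only U.S. and Canadian numbers are supported, it can help to sanity-check a number before dialing. The sketch below assumes the number is written in E.164 form (`+1` followed by ten digits); that format, and the `isNanpE164` helper, are assumptions for illustration, not a documented Symbl requirement. It checks North American Numbering Plan (NANP) structure only, and NANP also covers some Caribbean countries, so a passing number is not guaranteed to be in the U.S. or Canada.

```javascript
// Format check for NANP numbers in E.164 form: "+1", a 3-digit area code,
// a 3-digit exchange code and a 4-digit line number. Area and exchange
// codes cannot start with 0 or 1.
// NOTE: NANP also includes some Caribbean countries, so this is a format
// check only, not a country check.
const NANP_E164 = /^\+1[2-9]\d{2}[2-9]\d{6}$/;

function isNanpE164(phoneNumber) {
  // Strip spaces, parentheses, dots and hyphens before matching.
  const normalized = String(phoneNumber).replace(/[\s().-]/g, '');
  return NANP_E164.test(normalized);
}

console.log(isNanpE164('+1 (415) 555-0123')); // true
console.log(isNanpE164('4155550123'));         // false: no country code
```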

-

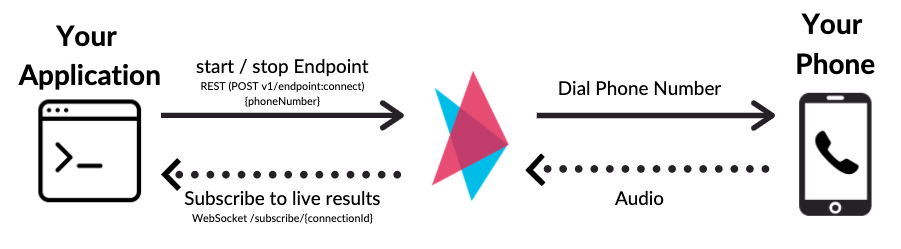

Your application uses the SDK to:

-

make a REST call to the Telephony API with the phone number details

-

subscribe to live results over a WebSocket connection

-

-

Once the call is accepted on the phone, the Telephony API starts receiving audio from the call.

-

Live transcription is streamed back to your application using the WebSocket connection.

Use one of the following, depending on the endpoint type. To call a SIP endpoint (a SIP UAS or SIP phone), set `type` to `sip` and pass its SIP URI:

```javascript
const connection = await sdk.startEndpoint({
  endpoint: {
    type: 'sip', // when dialing in to a SIP UAS or making a call to a SIP phone
    // Replace this with a real SIP URI
    uri: 'sip:user@example.com'
  }
});

const {connectionId} = connection;
console.log('Successfully connected. Connection Id: ', connectionId);
```

To make a regular phone call over PSTN:

```javascript
const connection = await sdk.startEndpoint({
  endpoint: {
    type: 'pstn', // when making a regular phone call
    phoneNumber: PHONE_NUMBER // include the country code
  }
});

const {connectionId} = connection;
console.log('Successfully connected. Connection Id: ', connectionId);
```

To make a phone call, call `startEndpoint` with `type` set to `pstn` and `phoneNumber` set to a valid U.S. or Canadian phone number. The returned connection object includes a `connectionId` value.
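If your application needs to dial either kind of endpoint, you could build the `endpoint` object from a single target string. This is a minimal sketch using only the `type`, `uri`, and `phoneNumber` fields shown above; `buildEndpoint` is a hypothetical helper, and treating any string that starts with `sip:` as a SIP URI is an assumption of this sketch.

```javascript
// Hypothetical helper (not part of symbl-node): builds the `endpoint` object
// passed to sdk.startEndpoint(), using only the fields shown in this guide.
function buildEndpoint(target) {
  if (target.startsWith('sip:')) {
    // SIP URI: dial a SIP UAS or SIP phone.
    return { type: 'sip', uri: target };
  }
  // Anything else is treated as a regular phone number (PSTN).
  return { type: 'pstn', phoneNumber: target };
}

// Usage inside the async block of index.js (assumed):
// const connection = await sdk.startEndpoint({ endpoint: buildEndpoint(PHONE_NUMBER) });
```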

Subscribe to the Live AI Insights

To get real-time AI insights, you must subscribe to the connection. Call the `subscribeToConnection` method in the SDK and pass the `connectionId` and a callback function, which is called for every new event, including the live transcription.

```javascript
// Subscribe to the connection using connectionId.
sdk.subscribeToConnection(connectionId, (data) => {
  const {type} = data;
  if (type === 'transcript_response') {
    const {payload} = data;
    // You get live transcription here!
    // The parentheses matter: `+` binds tighter than `&&`, so without them
    // the 'Live: ' prefix would be dropped from the output.
    process.stdout.write('Live: ' + (payload && payload.content) + '\r');
  } else if (type === 'message_response') {
    const {messages} = data;
    // You get processed messages in the transcript here. Real-time but not live!
    messages.forEach(message => {
      process.stdout.write('Message: ' + message.payload.content + '\n');
    });
  } else if (type === 'insight_response') {
    const {insights} = data;
    // You get any insights here!
    insights.forEach(insight => {
      process.stdout.write(`Insight: ${insight.type} - ${insight.text} \n\n`);
    });
  }
});
```

The callback you pass to `subscribeToConnection` receives a data object whose `type` field indicates the kind of response:

- `transcript_response` is used for low-latency live transcription results.
- `message_response` is used for processed transcription results in real time. Unlike the low-latency `transcript_response` results, these are typically generated a few seconds later and contain messages split by sentence boundaries.
- `insight_response` is used for any insights detected in real time.
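To keep the callback small and testable, the per-type handling can be pulled into a pure function. This is a sketch based on the event shapes used in this guide (`payload.content`, `messages[].payload.content`, `insights[].type` and `insights[].text`); `formatEvent` is a hypothetical helper, and any fields beyond those shown above are not assumed.

```javascript
// Hypothetical helper: turns one event received by the subscribeToConnection
// callback into lines of output, based on the event shapes in this guide.
// Unknown event types produce no output.
function formatEvent(data) {
  switch (data.type) {
    case 'transcript_response':
      // Low-latency live transcription.
      return [`Live: ${data.payload ? data.payload.content : ''}`];
    case 'message_response':
      // Processed messages split by sentence boundaries.
      return data.messages.map((m) => `Message: ${m.payload.content}`);
    case 'insight_response':
      // Insights detected in real time.
      return data.insights.map((i) => `Insight: ${i.type} - ${i.text}`);
    default:
      return [];
  }
}

// Usage (assumed):
// sdk.subscribeToConnection(connectionId, (data) =>
//   formatEvent(data).forEach((line) => console.log(line)));
```

Separating formatting from I/O this way lets you check the handling with recorded or mock events, without placing a call.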

End the Call

To end the call, you should make a stopEndpoint call. The following code stops the call after 60 seconds. Your business logic should determine when the call should end.

```javascript
// Automatically stop the call after 60 seconds.
setTimeout(async () => {
  const connection = await sdk.stopEndpoint({ connectionId });
  console.log('Stopped the connection');
  console.log('Conversation ID:', connection.conversationId);
}, 60000); // Increase 60000 if you want the call to continue for longer.
```

`stopEndpoint` returns an updated connection object that includes the `conversationId`. You can use the `conversationId` to fetch the results with the Conversation API, even after the call has ended.
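If you would rather `await` the `conversationId` than log it from inside a timer callback, the timed stop can be wrapped in a promise. This is a minimal sketch: `stopAfter` is a hypothetical helper, and it takes the stop function as a parameter so it can be exercised without a live call.

```javascript
// Hypothetical helper: waits `ms` milliseconds, calls `stop` (for example a
// wrapper around sdk.stopEndpoint) with the connection ID, and resolves with
// the conversationId from the returned connection object.
function stopAfter(ms, stop, connectionId) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      Promise.resolve()
        .then(() => stop({ connectionId }))
        .then((connection) => resolve(connection.conversationId))
        .catch(reject);
    }, ms);
  });
}

// Usage (assumed), inside the async block of index.js:
// const conversationId = await stopAfter(60000, (opts) => sdk.stopEndpoint(opts), connectionId);
```

Passing an arrow function rather than `sdk.stopEndpoint` directly avoids relying on how the SDK method uses `this`.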

Code Example

You can find the complete code used in this guide in the Get a Live Transcription with a Simple Phone Call example on GitHub.

Test

To verify that the code is working:

- Run your code:

  ```shell
  $ node index.js
  ```

- You should receive a phone call at the number you used in the `startEndpoint` call. Accept the call.
- Start speaking in English (the default language). You should see the live transcription printed to the console in real time.
- The call should automatically end after 60 seconds. If you end it sooner and don't invoke `stopEndpoint`, you will not receive the `conversationId`. If you need to access the results generated in the call, invoke `stopEndpoint` even if the call has already ended.

The example above invokes `stopEndpoint` after a fixed timeout of 60 seconds. This demonstrates the stop functionality and is not the recommended implementation for your application. In a real implementation, invoke `startEndpoint` and `stopEndpoint` as your application's business logic requires, i.e., when you want Symbl to start and stop processing.
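When the stop can be triggered from more than one place (a safety timeout plus your own business logic, for example), it is useful to make sure `stopEndpoint` runs only once and that every caller gets the same result. The sketch below shows one way to do that; `createStopper` is a hypothetical helper, and the stop function is injected so the guard can be tested on its own.

```javascript
// Hypothetical helper: wraps a stop function so it runs at most once,
// whether the stop is triggered by a timer, by business logic, or both.
// Every call returns the promise created by the first call.
function createStopper(stop, connectionId) {
  let pending = null;
  return function stopOnce() {
    if (pending === null) {
      // Defer into a promise so a synchronous throw becomes a rejection.
      pending = Promise.resolve().then(() => stop({ connectionId }));
    }
    return pending;
  };
}

// Usage (assumed):
// const stopOnce = createStopper((opts) => sdk.stopEndpoint(opts), connectionId);
// setTimeout(stopOnce, 60000);   // safety timeout
// ...call stopOnce() from your own logic when processing should end,
// then read (await stopOnce()).conversationId.
```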

What's next

Congratulations! You finished your integration with Symbl’s Telephony API using PSTN. Next, you can learn more about the Conversation API, SIP Integration, Post-Meeting Summary, and Active Speaker Events.

Termination due to prolonged silence

If the meeting is silent for more than 30 minutes, it is automatically terminated. Charges apply for the silent minutes.
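Since silent minutes are still charged, you may want your application to stop the call itself after a shorter period of silence. The sketch below is a hypothetical client-side watcher, not a Symbl feature. It assumes that `transcript_response` events only arrive while someone is speaking, so the time since the last such event approximates silence. The clock is injectable for testing.

```javascript
// Hypothetical client-side watcher (not part of symbl-node): tracks the time
// since the last transcript event and calls `onIdle` once that gap reaches
// `limitMs`. It fires at most once until new activity is recorded.
function createSilenceWatcher({ limitMs, onIdle, now = Date.now }) {
  let lastActivity = now();
  let fired = false;
  return {
    // Call this whenever a transcript_response event arrives.
    activity() {
      lastActivity = now();
      fired = false;
    },
    // Call this periodically (for example from setInterval) to check for silence.
    check() {
      if (!fired && now() - lastActivity >= limitMs) {
        fired = true;
        onIdle();
      }
    },
  };
}

// Usage (assumed): stop after 5 minutes of silence, checking every 10 seconds.
// const watcher = createSilenceWatcher({ limitMs: 5 * 60 * 1000, onIdle: stopOnce });
// setInterval(() => watcher.check(), 10000);
```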
