Pre-built UI experiences

Symbl.ai provides reusable and customizable UI components in two basic categories:

Summary UI

Symbl.ai provides a pre-built Summary UI that presents what was understood from a conversation after it has been processed. The Summary UI is available as a URL that can be shared via email with all (or selected) participants, or embedded as a link in the conversation history within your application.
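Summary UI URLs are created through the Symbl.ai API (see the API Reference and Tutorials for each Summary UI type). The TypeScript sketch below only assembles such a request; it does not send it. It assumes the endpoint `POST https://api.symbl.ai/v1/conversations/{conversationId}/experiences`, bearer-token authentication, and the `name` values `verbose-text-summary` and `video-summary`. These reflect our reading of the API reference, so verify them there. `buildSummaryUiRequest` is a hypothetical helper, and we also assume that the JSON response contains a `url` field with the shareable link.

```typescript
// Hypothetical helper: assembles (but does not send) a request that asks
// Symbl.ai to generate a Summary UI URL for a processed conversation.
// ASSUMPTION: endpoint path, auth header, and body fields follow our reading
// of the Symbl.ai API reference; verify them before relying on this.

type SummaryUiName = "verbose-text-summary" | "video-summary";

interface SummaryUiRequest {
  url: string;
  method: "POST";
  headers: { [name: string]: string };
  body: string;
}

function buildSummaryUiRequest(
  conversationId: string,
  accessToken: string,
  uiName: SummaryUiName,
  videoUrl?: string
): SummaryUiRequest {
  // The Video Summary UI needs a media URL to play back.
  if (uiName === "video-summary" && !videoUrl) {
    throw new Error("video-summary requires a videoUrl");
  }
  const payload: { name: SummaryUiName; videoUrl?: string } = { name: uiName };
  if (videoUrl) {
    payload.videoUrl = videoUrl;
  }
  return {
    url:
      "https://api.symbl.ai/v1/conversations/" +
      encodeURIComponent(conversationId) +
      "/experiences",
    method: "POST",
    headers: {
      Authorization: "Bearer " + accessToken,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(payload),
  };
}

// Usage with placeholder values; send it with the HTTP client of your choice.
const request = buildSummaryUiRequest("1234567890", "<ACCESS_TOKEN>", "verbose-text-summary");
console.log(request.url);
console.log(request.body);
```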

You can generate the following types of Summary UI: Text Summary UI, Video Summary UI, and Trackers and Analytics UI.

The Pre-built Summary UI provides the following:

- Title and details of the conversation including date, number of participants, etc.

- Names of all the participants.

- Topics covered in the conversation in the order of importance.

- Full, searchable transcript of the conversation. Transcripts can be edited, copied and shared.

- Insights, action items, and questions detected in the transcript. Insights can be edited, shared, or dismissed, and the date or assignee of an action item can be modified.

- Customization of the Summary UI to fit your use case or product requirements.

Key Features

- Interactive UI: The Summary UI presents conversation details and insights in a simple interface. You can copy content, click points in the conversation to view the transcript on demand, and (in the Video Summary UI) play back video from those points.

- White labeling: Customize your Summary UI by adding your own brand logo, favicon, font, color, and so on to provide a branded look and feel.

- Custom Domain: Add your own domain in the Summary UI URL for personalization.

- Tuning: Tune your Summary Page with different configurations, such as enabling or disabling Summary Topics and setting the order of topics.

- User Engagement Analytics: Record user interactions on your Summary UI with Segment, a popular analytics tool, so that you can see how your end users use the Summary UI.

Symbl.ai React Elements

Symbl.ai React Elements help developers embed customizable elements for transcription, insights, and action items in both real-time and post-conversation experiences. These embeddable components simplify building an experience with your desired branding.

Symbl.ai React Elements support:

- Live captioning

- Topics

- Action Items

- Suggestive Actions

Read more in the Symbl.ai React Elements page.

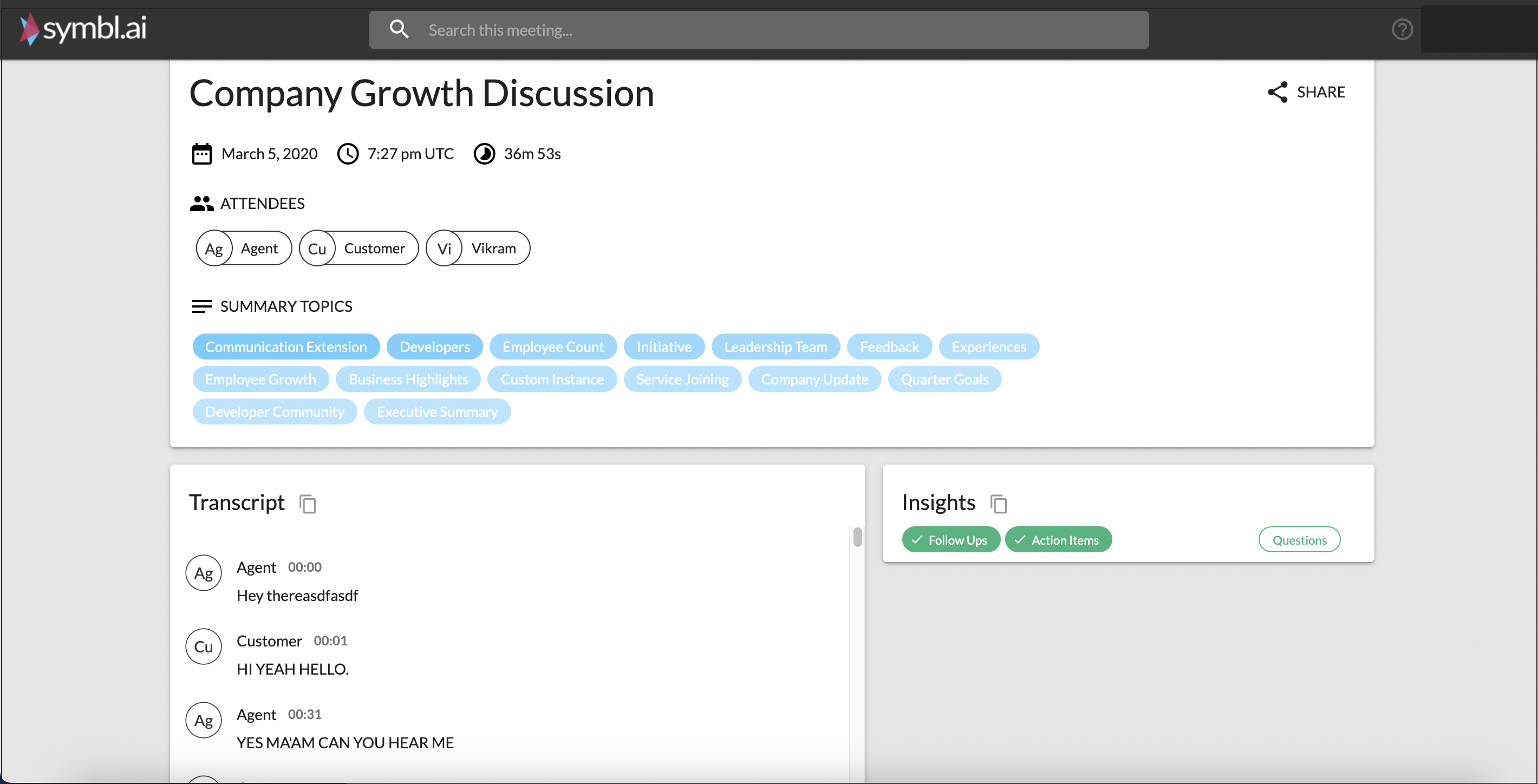

Text Summary UI

The Text Summary UI provides an interactive interface that shows conversation insights such as Action Items, Topics, Questions, and Follow Ups, and lets you select conversation transcripts, insights, and filters.

When you select an item, the UI displays the timestamp where it occurred in the course of the conversation, and you can start playback from there.

Currently, the Text Summary UI is supported for audio conversations.

The Text Summary UI displays the following details:

- Title - The title represents the subject of the meeting in a concise manner.

- Date - Date of the meeting, formatted as MM/DD/YY.

- Time - Time at which the meeting occurred, in Coordinated Universal Time (UTC).

- Duration - Total duration of the meeting.

- Attendees - A compact list of the people who attended the meeting. You can label each attendee by their function or position, for example, Agent or Customer.

- Summary Topics - A concise list of the clusters of subjects discussed throughout the meeting.

- Transcript - Transcript of the conversation, with speaker separation if Speaker Diarization is enabled. You can view the transcript of the entire conversation and see what each participant said.

- Insights - The Insights section shows the Conversation Intelligence detected in the conversation, such as Questions, Action Items, Topics, and Follow Ups. Clicking an insight takes you to the part of the transcript where it was detected.
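If you show the same header details in your own application, the following sketch is one way to reproduce the conventions listed above: the date as MM/DD/YY and the time in UTC. `formatMeetingHeader` is a hypothetical helper. Several details are assumptions and not documented Summary UI behavior: the input shape (a start time in epoch milliseconds and a duration in seconds), the `31m 05s` duration style, and computing the date in UTC so that it matches the time.

```typescript
// Hypothetical helper: format a meeting header in the style described above.
// Assumes the start time is epoch milliseconds and the duration is in seconds.

function pad2(n: number): string {
  return n < 10 ? "0" + n : String(n);
}

interface MeetingHeader {
  date: string;     // MM/DD/YY, computed in UTC
  time: string;     // HH:MM UTC
  duration: string; // e.g. "31m 05s" (assumed display style)
}

function formatMeetingHeader(startMs: number, durationSec: number): MeetingHeader {
  const d = new Date(startMs);
  const date =
    pad2(d.getUTCMonth() + 1) + "/" + pad2(d.getUTCDate()) + "/" + pad2(d.getUTCFullYear() % 100);
  const time = pad2(d.getUTCHours()) + ":" + pad2(d.getUTCMinutes()) + " UTC";
  const minutes = Math.floor(durationSec / 60);
  const seconds = Math.floor(durationSec % 60);
  return { date, time, duration: minutes + "m " + pad2(seconds) + "s" };
}

// 4 July 2021, 15:05 UTC, lasting 31 minutes 5 seconds.
console.log(formatMeetingHeader(Date.UTC(2021, 6, 4, 15, 5), 1865));
```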

API Reference

Tutorials

- Creating Text Summary UI

- Tuning your Summary Page

- White label your Summary Page

- Add custom domain to your Summary Page

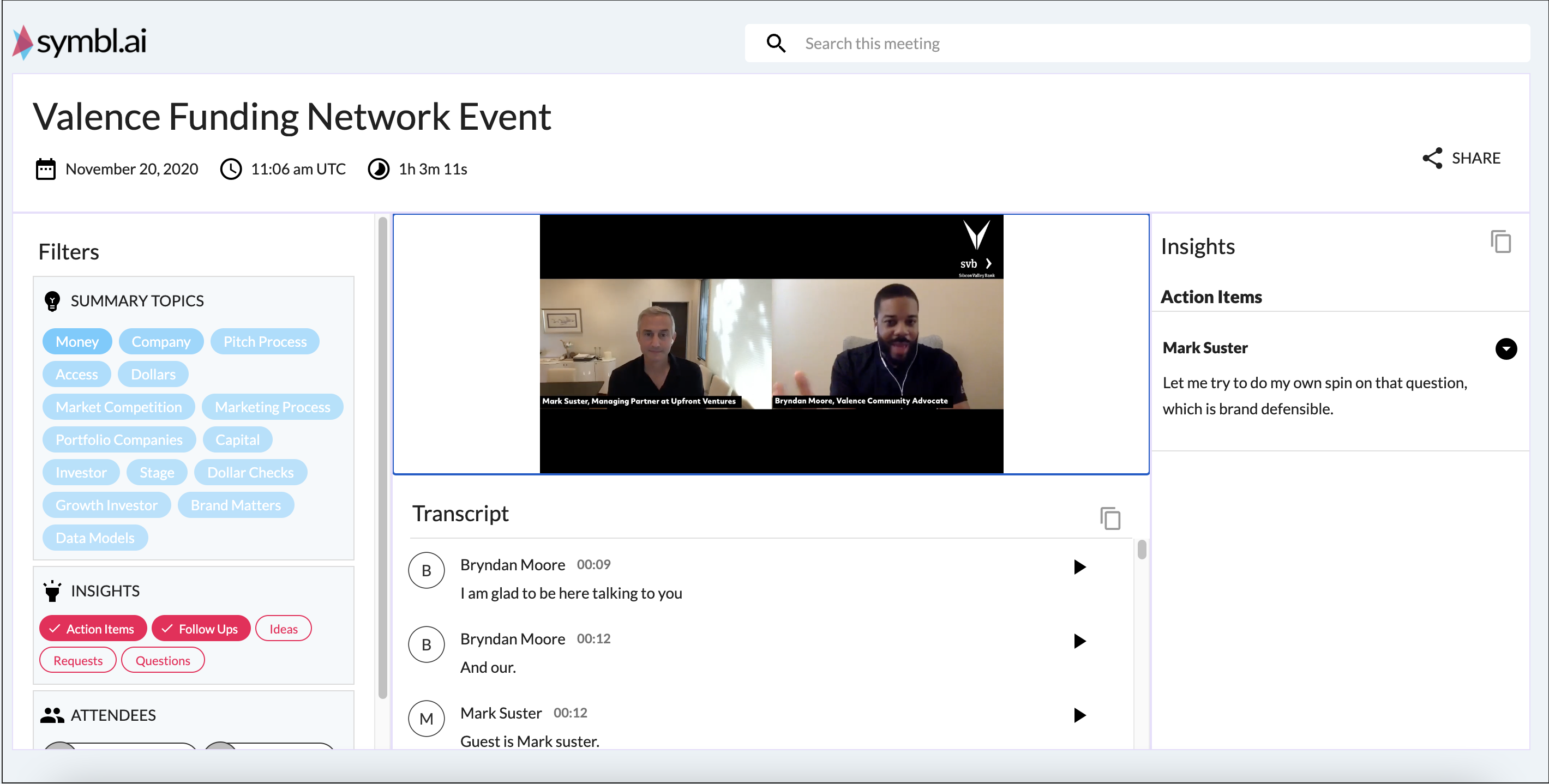

Video Summary UI

The Video Summary UI lets users interact with Symbl.ai elements (the transcript section, Insights, and Filters) alongside an audio or video recording. Users can select key elements such as topics, transcripts, and insights, and the interface shows the timestamp where each occurred and begins playback from there.

The Video Summary UI provides the following details:

- Title - The title represents the subject of the meeting in a concise manner.

- Date - Date of the meeting, formatted as MM/DD/YY.

- Time - Time at which the meeting occurred, in Coordinated Universal Time (UTC).

- Duration - Total duration of the meeting.

- Attendees - A compact list of the people who attended the meeting. You can label each attendee by their function or position, for example, Agent or Customer.

- Summary Topics - A concise list of the clusters of subjects discussed throughout the meeting.

- Transcript - Transcript of the conversation, with speaker separation if Speaker Diarization is enabled. You can view the transcript of the entire conversation and see what each participant said.

- Insights - The Insights section shows the Conversation Intelligence detected in the conversation, such as Questions, Action Items, Topics, and Follow Ups. Clicking an insight takes you to the part of the transcript where it was detected.

Key Features

- Video Playback: You can begin playback from a particular timestamp in the transcript by clicking it.

- Transcript Navigation: Clicking an Insight takes you to the related location in the transcript and automatically plays the video from there.

- Topic Highlights: Selecting a topic highlights it in the transcript and in the generated Insights. From the search bar, you can switch to other topics and autoplay the video from those points as well.

- Audio file: You can use an audio file by passing its URL in the `videoUrl` parameter. With an audio file, the experience is slightly different: during playback, the current speaker's name, if available, is displayed where the video would otherwise be. Supported audio formats are `mp3` and `wav`.
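Only `mp3` and `wav` audio is listed as supported, so you may want to check a media URL before passing it in `videoUrl`. The sketch below is a hypothetical helper. It inspects only the file extension in the URL path and ignores any query string or fragment. It cannot detect a mislabeled file, and Symbl.ai may apply its own validation.

```typescript
// Hypothetical helper: returns true when the URL path ends in an audio
// extension listed as supported above (.mp3 or .wav), case-insensitively.
// Only the extension is checked, not the actual file contents.

const SUPPORTED_AUDIO_EXTENSIONS: string[] = [".mp3", ".wav"];

function isSupportedAudioUrl(mediaUrl: string): boolean {
  // Drop any query string or fragment before looking at the extension.
  const path = (mediaUrl.split(/[?#]/)[0] || "").toLowerCase();
  return SUPPORTED_AUDIO_EXTENSIONS.some(
    (ext) => path.slice(-ext.length) === ext
  );
}

console.log(isSupportedAudioUrl("https://example.com/calls/demo.MP3?sig=abc")); // true
console.log(isSupportedAudioUrl("https://example.com/calls/demo.ogg")); // false
```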

How to Enable the Video Summary UI

Add the following query parameter to the existing summary URL:

showVideoSummary=true

For example:

https://example.symbl.ai/meeting/#/eyJzZXNzaW9uSWQiOiI2NTA0OTI1MTg4MDYzMjMyIiwidmlkZW9VcmwiOiJodHRwczovL3N0b3JhZ2UuZ29vZ2xlYXBpcy5jb20vcmFtbWVyLXRyYW5zY3JpcHRpb24tYnVja2V0LzE5MzE0MjMwMjMubXA0In0=?showVideoSummary=true
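The step above is a string operation on the summary URL. In the example, the parameter comes after the `#/...` fragment. The sketch therefore appends to the end of the URL instead of using `URL.searchParams`, which would place the parameter before the `#`. `withVideoSummary` is a hypothetical helper that assumes your summary URLs follow the same pattern as the example.

```typescript
// Hypothetical helper: appends showVideoSummary=true to an existing summary
// URL, after the "#/..." fragment as in the example above.

function withVideoSummary(summaryUrl: string): string {
  // Leave the URL unchanged if the parameter is already present.
  if (/[?&]showVideoSummary=true(&|$)/.test(summaryUrl)) {
    return summaryUrl;
  }
  // When the URL has a fragment, look for an existing "?" only inside it,
  // because that is where the example places its parameters.
  const hashIndex = summaryUrl.indexOf("#");
  const tail = hashIndex >= 0 ? summaryUrl.slice(hashIndex) : summaryUrl;
  const separator = tail.indexOf("?") >= 0 ? "&" : "?";
  return summaryUrl + separator + "showVideoSummary=true";
}

const summaryUrl =
  "https://example.symbl.ai/meeting/#/eyJzZXNzaW9uSWQiOiI2NTA0OTI1MTg4MDYzMjMyIiwidmlkZW9VcmwiOiJodHRwczovL3N0b3JhZ2UuZ29vZ2xlYXBpcy5jb20vcmFtbWVyLXRyYW5zY3JpcHRpb24tYnVja2V0LzE5MzE0MjMwMjMubXA0In0=";
console.log(withVideoSummary(summaryUrl));
```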

What if there is no video in my URL?

The `videoUrl` parameter takes effect only when no video is already present in the UI.

API Reference

Tutorials

- Creating Video Summary UI

- Tuning your Summary Page

- White label your Summary Page

- Add custom domain to your Summary Page

Trackers and Analytics UI

The Trackers and Analytics UI provides a waveform visualization with conversation insights. The waveform highlights Topics on the timeline with color-coded timestamps, giving you a snapshot of when they occurred in the course of the conversation. You can view Trackers with sentiment scores, transcripts, speaker information, and the other conversation insights described below.

See Trackers and Analytics UI sample

Currently, the Trackers and Analytics UI is supported for audio conversations.


| Component | Description |
|---|---|
| 1. Waveform Timeline | The waveform timeline uses color-coded timestamps to show exactly when a Topic was discussed in the conversation. |
| 2. Topics with Sentiment Score | Hover over a Topic to see its Sentiment Score, which tells you whether the Topic was discussed in a positive or negative way. Read more in the Sentiment Polarity section. |
| 3. Trackers | Shows the Trackers identified in the course of the conversation, including how many times each Tracker occurred and who said it. |
| 4. Analytics | Provides an overview of speaker talk and silence ratios and words per minute. |
| 5. Transcript | Transcript of the conversation, with speaker separation if Speaker Diarization is enabled. See the setup steps below. |
| 6. Speaker Analytics | A timeline showing each speaker's talk time, along with timestamps of when questions were asked and by whom. |
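To make the Analytics metrics concrete, the sketch below derives a per-speaker talk ratio and words per minute, plus an overall silence ratio, from a list of speaker segments. The `Segment` shape, the `speakerAnalytics` function, and the formulas are illustrative assumptions, not Symbl.ai's documented computation. The formulas used are: talk ratio is speaker talk time divided by conversation duration; words per minute is words divided by minutes of that speaker's talk time; silence is time covered by no segment.

```typescript
// Illustrative only: the Segment shape and these formulas are assumptions,
// not Symbl.ai's documented way of computing the Analytics component.

interface Segment {
  speaker: string;
  startSec: number;
  endSec: number;
  wordCount: number;
}

interface SpeakerStats {
  talkRatio: number;      // speaker talk time / conversation duration
  wordsPerMinute: number; // words / minutes of this speaker's talk time
}

function speakerAnalytics(
  segments: Segment[],
  durationSec: number
): { perSpeaker: { [speaker: string]: SpeakerStats }; silenceRatio: number } {
  // Sum talk time and words for each speaker.
  const totals: { [speaker: string]: { talkSec: number; words: number } } = {};
  for (const s of segments) {
    const t = totals[s.speaker] || { talkSec: 0, words: 0 };
    t.talkSec += s.endSec - s.startSec;
    t.words += s.wordCount;
    totals[s.speaker] = t;
  }

  const perSpeaker: { [speaker: string]: SpeakerStats } = {};
  for (const speaker of Object.keys(totals)) {
    const t = totals[speaker];
    if (!t) continue;
    perSpeaker[speaker] = {
      talkRatio: t.talkSec / durationSec,
      wordsPerMinute: t.talkSec > 0 ? (t.words * 60) / t.talkSec : 0,
    };
  }

  // Merge overlapping segments so crosstalk is not counted twice, then
  // treat any time not covered by a merged interval as silence.
  const sorted = segments.slice().sort((a, b) => a.startSec - b.startSec);
  let covered = 0;
  let curStart = -Infinity; // no open interval yet
  let curEnd = -Infinity;
  for (const s of sorted) {
    if (s.startSec > curEnd) {
      if (curEnd > curStart) covered += curEnd - curStart;
      curStart = s.startSec;
      curEnd = s.endSec;
    } else {
      curEnd = Math.max(curEnd, s.endSec);
    }
  }
  if (curEnd > curStart) covered += curEnd - curStart;

  return { perSpeaker, silenceRatio: (durationSec - covered) / durationSec };
}

const stats = speakerAnalytics(
  [
    { speaker: "Agent", startSec: 0, endSec: 30, wordCount: 75 },
    { speaker: "Customer", startSec: 30, endSec: 50, wordCount: 40 },
    { speaker: "Agent", startSec: 55, endSec: 85, wordCount: 60 },
  ],
  100
);
console.log(stats);
```

In this made-up example, the Agent talks for 60 of 100 seconds and the Customer for 20, which leaves 20 seconds uncovered by any segment.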

To create a fully functional version of the Trackers and Analytics UI, you must first:

- Set up Trackers: Add Trackers to your account using the platform-based Managed Trackers Library or Custom Trackers. View the Trackers associated with your account at Your Trackers.

- Enable Speaker Separation: Make sure that speaker separation is enabled when submitting data to the Async API. Pass both `enableSpeakerDiarization=true` and `diarizationSpeakerCount` (set to the number of speakers) as request parameters. Read more at Speaker Separation > Query Params. When these optional parameters are set, the Speaker Analytics component can generate high-resolution information.

- Process a conversation and receive a conversation ID: Process a conversation using async or streaming as described at Process a Conversation > Overview. When you process a conversation, you receive a `conversationId`, which is needed to generate Conversation Intelligence from the conversation.

- Make sure Trackers are detected: Follow the step-by-step instructions in Trackers.

Note that once the raw conversation data is processed by Symbl.ai and a conversation ID is generated, there is no way to retroactively add Trackers or enable speaker separation. In this case, you need to submit the conversation data to the Async API again for processing.
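The speaker separation step above comes down to two request parameters, and the sketch below builds that query string. `diarizationQuery` is a hypothetical helper. The endpoint in the usage line (`https://api.symbl.ai/v1/process/audio/url`) is an assumption for illustration; check the Async API reference for the endpoint that matches your media type and for how it expects these parameters.

```typescript
// Hypothetical helper: builds the speaker separation query parameters named
// in the steps above. The speaker count should match the number of distinct
// speakers you expect in the conversation.

function diarizationQuery(speakerCount: number): string {
  if (
    !isFinite(speakerCount) ||
    Math.floor(speakerCount) !== speakerCount ||
    speakerCount < 1
  ) {
    throw new Error("diarizationSpeakerCount must be a positive integer");
  }
  const params: Array<[string, string]> = [
    ["enableSpeakerDiarization", "true"],
    ["diarizationSpeakerCount", String(speakerCount)],
  ];
  return params
    .map(([key, value]) => encodeURIComponent(key) + "=" + encodeURIComponent(value))
    .join("&");
}

// ASSUMPTION: endpoint path shown only for illustration.
const asyncUrl = "https://api.symbl.ai/v1/process/audio/url?" + diarizationQuery(2);
console.log(asyncUrl);
// prints https://api.symbl.ai/v1/process/audio/url?enableSpeakerDiarization=true&diarizationSpeakerCount=2
```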

API Reference

Tutorials
